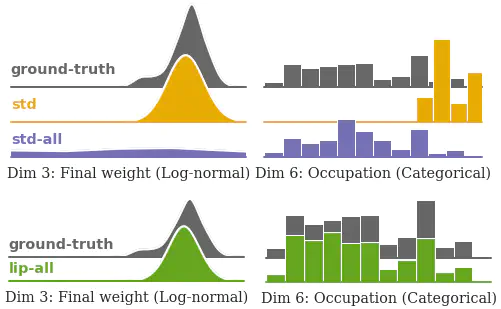

Illustrative example of unbalanced learning.

Abstract

Probabilistic learning is increasingly being tackled as an optimization problem, with gradient-based approaches as the predominant methods. When modeling multivariate likelihoods, a common but undesirable outcome is that the learned model fits only a subset of the observed variables, overlooking the rest. In this work, we study this problem through the lens of multitask learning (MTL), where similar effects have been broadly studied. While MTL solutions do not directly apply in the probabilistic setting (as they cannot handle the likelihood constraints), we show that similar ideas may be leveraged during data preprocessing. First, we show that data standardization often helps under common continuous likelihoods, but it is not enough in the general case, especially under mixed continuous and discrete likelihood models. To balance multivariate learning, we then propose a novel data preprocessing method, Lipschitz standardization, which balances the local Lipschitz smoothness across variables. Our experiments on real-world datasets show that Lipschitz standardization leads to more accurate multivariate models than those learned using existing data preprocessing techniques.