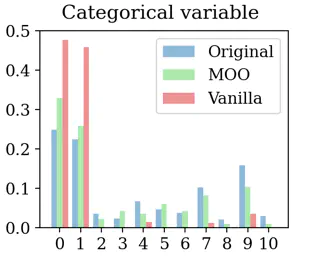

Data reconstruction on a discrete variable.

Abstract

Variational autoencoders (VAEs) have been successfully applied to complex input data such as images and videos. Counterintuitively, their application to simpler, heterogeneous data, where features are of different types, often leads to underwhelming results. While the goal in the heterogeneous case is to accurately approximate all observed features, VAEs often perform poorly on a subset of them. In this work, we study this feature-overlooking problem through the lens of multitask learning (MTL), relating it to the problem of negative transfer and the interaction between gradients from different features. With these new insights, we propose to train VAEs by leveraging off-the-shelf solutions from the MTL literature based on multi-objective optimization. Furthermore, we empirically demonstrate how these solutions significantly boost the performance of different VAE models and training objectives on a large variety of heterogeneous datasets.